Generating Test Scripts with the OpenAPI Test Generator

1. Overview

The OpenAPI Test Generator is a Testany feature that automates the creation of API test scripts directly from your OpenAPI specifications. By leveraging this feature, users can significantly accelerate their API testing efforts, ensure consistent test coverage, and reduce the manual effort required for initial test case development.

Key Benefits:

Rapid Test Script Generation: Automatically creates a suite of test scripts (including positive, positive boundary and various negative scenarios) based on your API definition, dramatically speeding up the test creation process.

Improved Test Coverage: Generates tests covering different aspects of your API endpoints (e.g., valid requests, invalid data types, missing required fields, boundary conditions) as defined in the OpenAPI spec.

Consistency and Standardization: Ensures test scripts adhere to a standardized structure and methodology, promoting maintainability and collaboration within testing teams.

Reduced Manual Effort: Minimizes the need for manual test script writing for initial API validation, allowing testers to focus on more complex scenarios and exploratory testing.

Integration with Git: Stores generated test scripts directly in a specified Git repository, facilitating version control, code reviews, and integration into CI/CD pipelines.

2. Prerequisites

Before you can successfully generate test scripts from your OpenAPI specification using Testany, please ensure the following prerequisites are met:

2.1 Create Git Repository Credentials

To enable Testany to interact with your Git repository for both reading the OpenAPI specification (if hosted there) and writing the generated test scripts, you will need to configure two types of Git access:

Read-only Access: Required if your OpenAPI specification file is hosted in a private Git repository and you choose the "URL Import" method during generation. This access allows Testany to securely fetch and read the specification file.

Write Access: Required for Testany to commit and push the automatically generated test scripts and associated environment files into the designated branch of your target Git repository.

To provide Testany with the necessary Git access, you must generate a Personal Access Token within your Git provider (Bitbucket, GitHub, etc.). It is highly recommended to store these tokens securely in a dedicated secret management system like:

Azure Key Vault

AWS Secrets Manager

👉 Reference:

Once the token is created, request your workspace admin to register the credential in Testany.

👉 Reference: Managing Test Credential

2.2 Ensure OpenAPI Specification Quality

It is strongly recommended that you audit your OpenAPI specification with an API security and quality tool (such as 42Crunch) before uploading or importing it into Testany, to ensure its quality and adherence to best practices. Specifically, strive for a high security score (ideally full marks) and a data validation score above 40 points. Auditing the spec beforehand helps ensure that the generated tests are based on a well-defined and secure API contract, which leads to more robust and successful test script generation.
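As a rough local pre-check before running a full audit, the sketch below lists schema properties that declare a type but none of the constraint keywords this guide mentions. It is not a substitute for an audit tool and does not reproduce any audit score. The function name, the keyword table, and the sample spec are assumptions made for illustration, and only schemas under components/schemas are inspected.

```python
# Constraint keywords per type, taken from the scenarios described in this guide.
CONSTRAINT_KEYWORDS = {
    "string": ("minLength", "maxLength", "pattern", "format", "enum"),
    "integer": ("minimum", "maximum", "enum", "format", "multipleOf"),
    "number": ("minimum", "maximum", "enum", "format", "multipleOf"),
    "array": ("minItems", "maxItems", "uniqueItems"),
}


def find_unconstrained_properties(spec: dict) -> list[str]:
    """Return 'SchemaName.property' for typed properties without any constraint keyword."""
    findings = []
    schemas = spec.get("components", {}).get("schemas", {})
    for schema_name, schema in schemas.items():
        for prop_name, prop in schema.get("properties", {}).items():
            # Properties without a listed type (for example $ref entries) are skipped.
            keywords = CONSTRAINT_KEYWORDS.get(prop.get("type"))
            if keywords and not any(keyword in prop for keyword in keywords):
                findings.append(f"{schema_name}.{prop_name}")
    return findings


# Hypothetical spec fragment used only to demonstrate the check.
sample_spec = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "Pet": {
                "type": "object",
                "properties": {
                    "id": {"type": "integer", "format": "int64"},
                    "name": {"type": "string"},
                    "status": {"type": "string", "enum": ["available", "sold"]},
                },
            }
        }
    },
}

unconstrained = find_unconstrained_properties(sample_spec)
```

In this sample only Pet.name is reported, because it has a type but no length, pattern, format, or enum constraint. Properties like that give the generator little to derive negative or boundary scenarios from.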

2.3 Identify the Git Branch and Generation Record Owner

Before initiating the test script generation process, you need to make two key decisions:

Target Git Branch: You must specify the name of the Git branch where the generated test scripts will be committed. It is strongly recommended to use a new branch dedicated to this generation process. This practice ensures reliable test execution and prevents accidental overwriting of existing code on stable branches. DO NOT use an existing branch unless you specifically intend to overwrite its contents with the generated scripts. Testany will create the branch if it does not exist.

Generation Record Owner(s): Decide which specific APIs defined in the OpenAPI specification you intend to generate tests for and who within your team will be responsible for maintaining the generated test scripts and the corresponding generation record in Testany. Assigning designated owner(s) to each generation record ensures proper accountability and facilitates future updates or troubleshooting.

3. Step-by-Step Guide

Follow these steps to generate API test scripts from your OpenAPI specification using Testany.

3.1 Pre-steps: Accessing the Generator

Begin by accessing the 'Generate from OpenAPI' wizard within the Testany interface:

Navigate to the 'Test Case Library' section.

Click the '↓' arrow icon located next to the 'Register test case' button.

From the dropdown menu, select 'Generate from OpenAPI'.

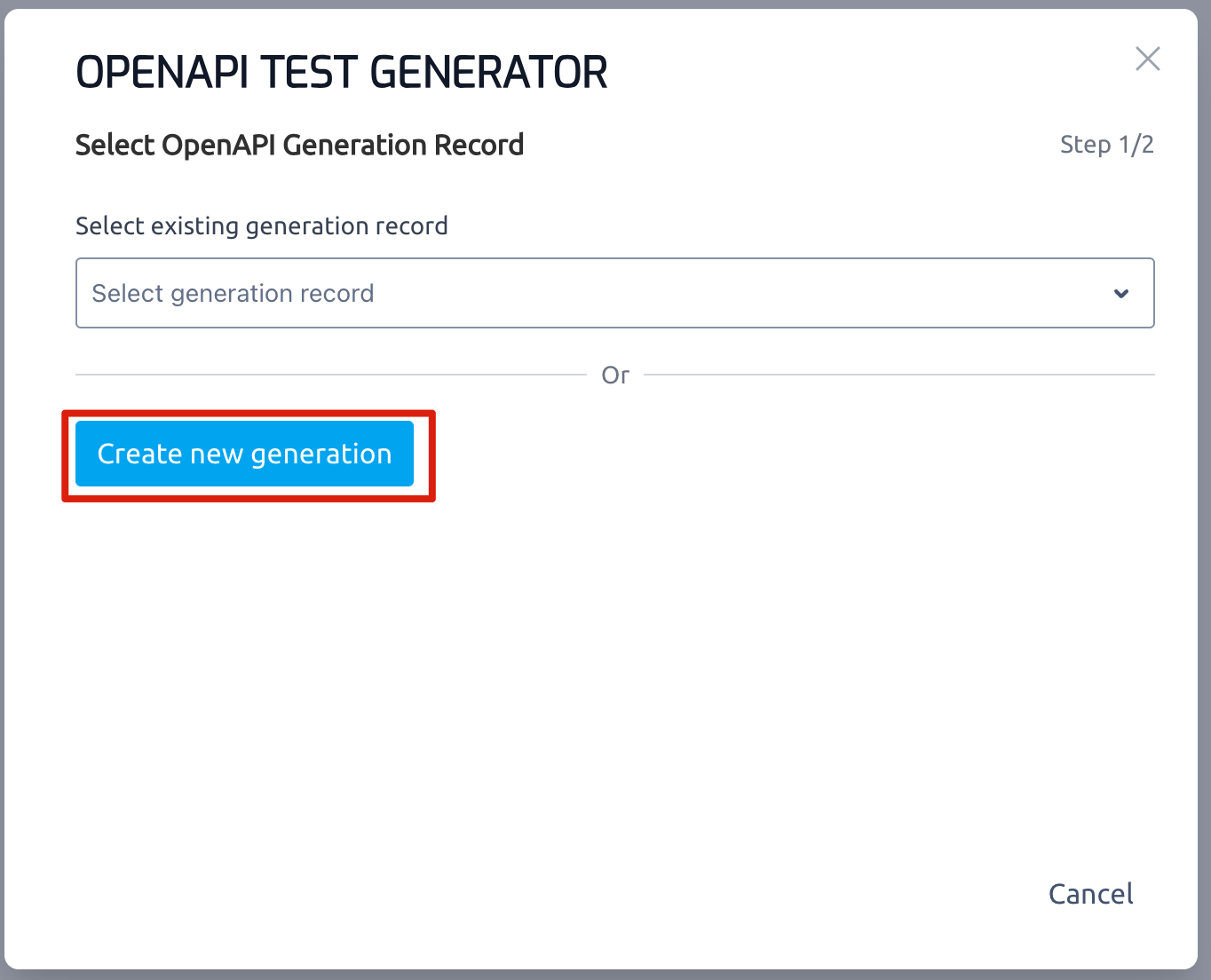

3.2 Create a New Generation Record

In the 'Step 1/2' of the generator wizard, you will typically select whether to create a new generation or update an existing one. To start fresh, click 'Create new generation'.

3.2.1 Configure generation

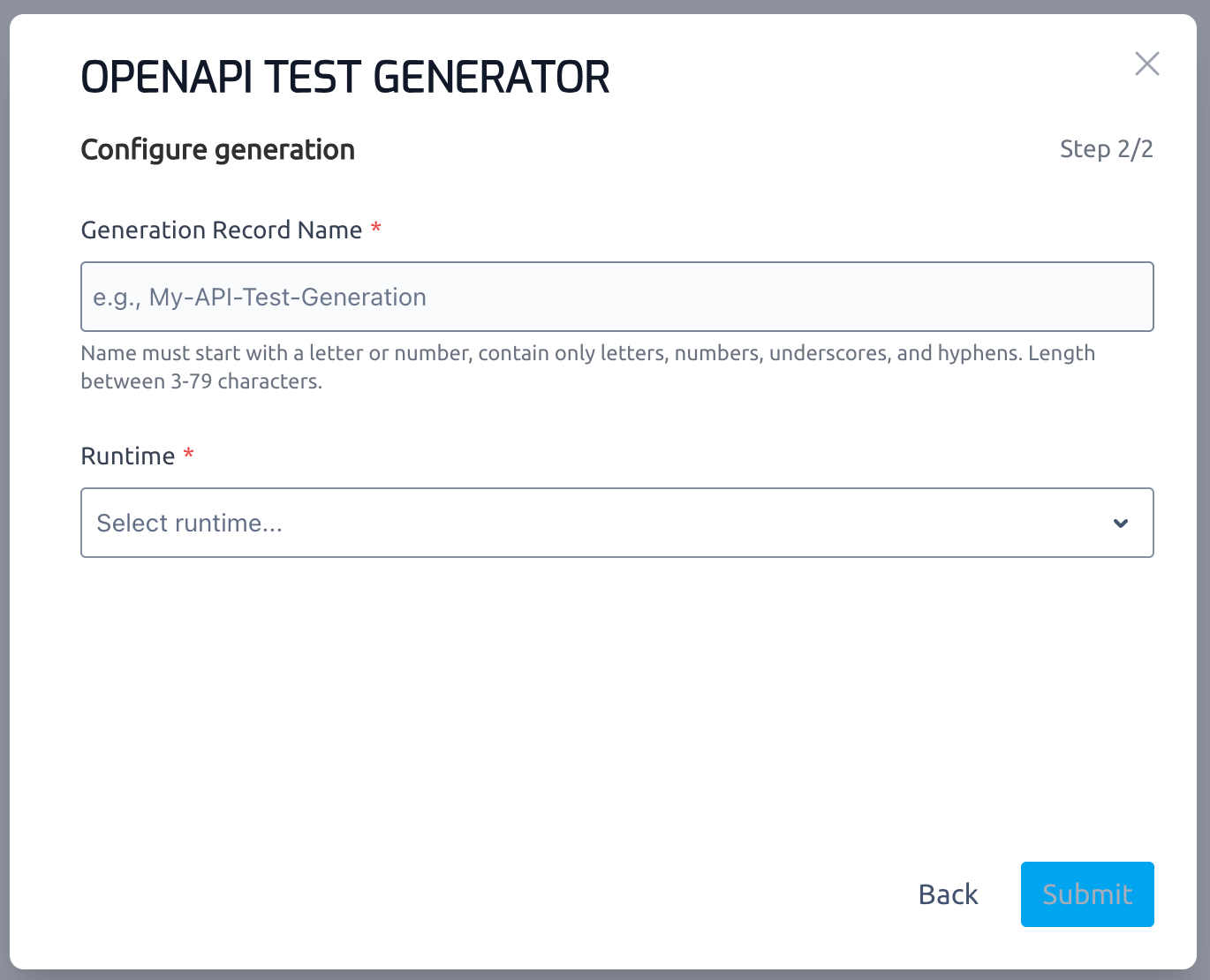

In 'Step 2/2' of the generator wizard, configure the detailed settings for the generation and then click 'Submit'.

3.2.1.1 Basic Information

Provide essential information for the generation record:

Name: Enter a unique and descriptive name for this generation record. This name helps identify the record later. Please note that the name cannot be changed after the generation is submitted.

Runtime: Expand the dropdown list and select the target test runtime environment where these scripts will be executed. The available runtimes depend on your Testany configuration.

Click 'Next' to proceed.

Note: If a runtime is currently non-operational, it will be displayed as greyed out in the selection list.

3.2.1.2 OpenAPI Specification

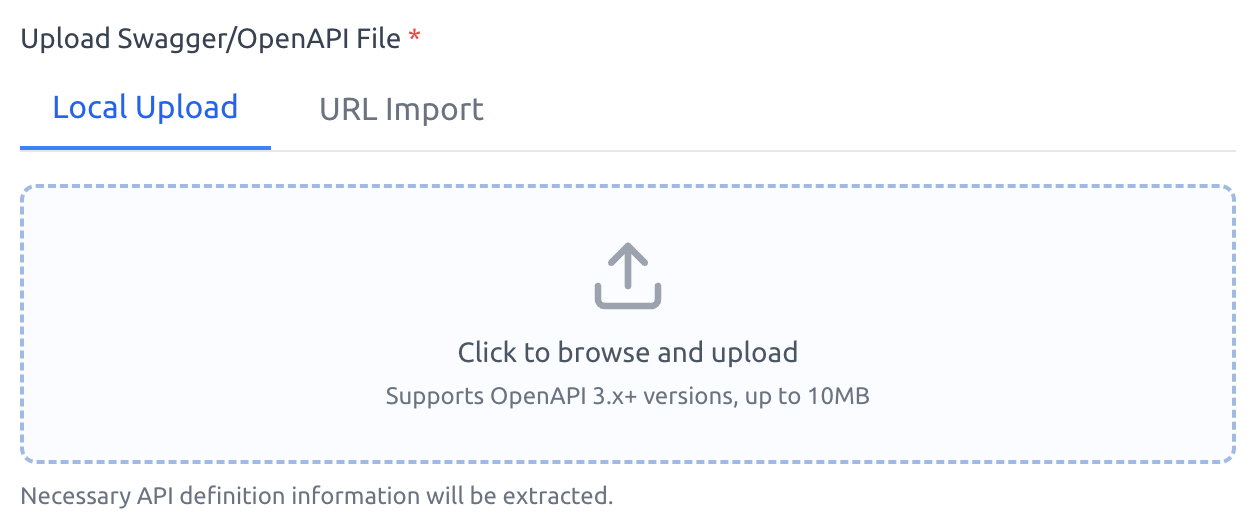

Provide the OpenAPI specification file (versions 3.x and above are supported) to Testany using one of the following methods.

3.2.1.2.1 Local Upload

If you have the OpenAPI specification file stored locally on your computer:

Switch to the 'Local Upload' tab.

Click the designated area to browse and upload your OpenAPI file directly to Testany.

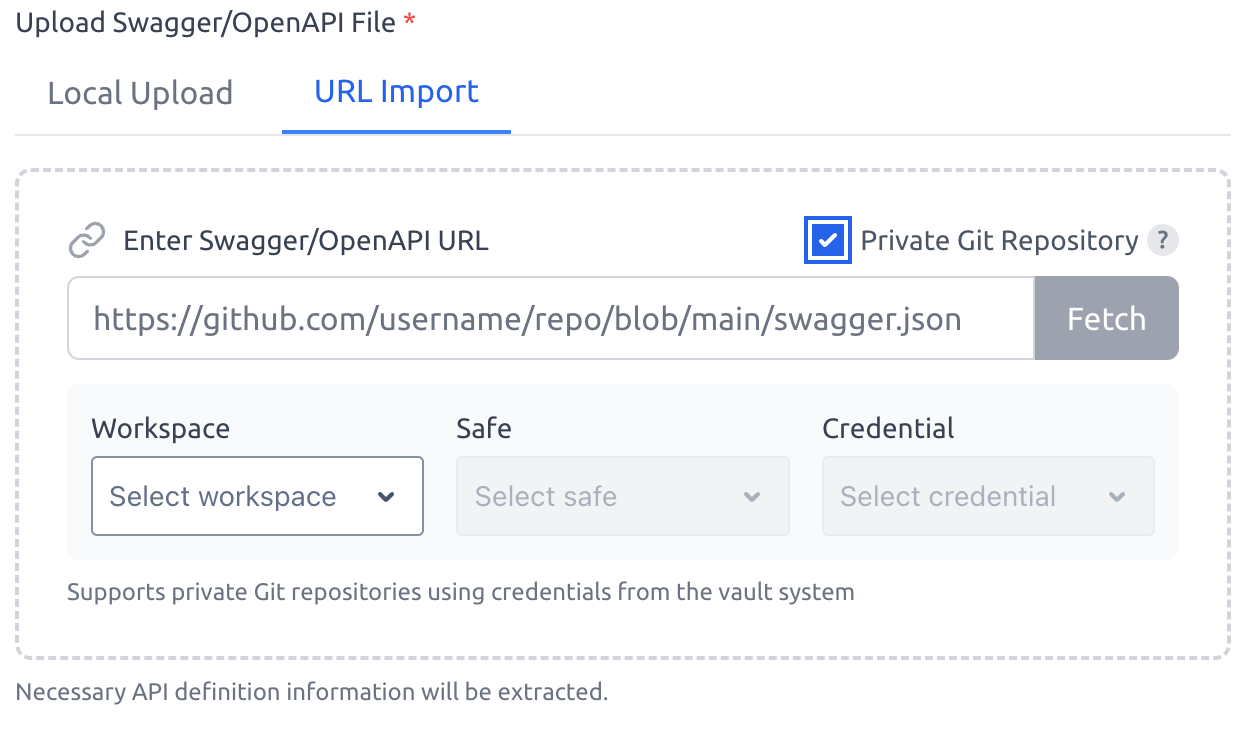

3.2.1.2.2 URL Import

If your OpenAPI specification is accessible via a URL:

Switch to the 'URL Import' tab.

Enter the full URL of the OpenAPI file.

Click 'Fetch' to retrieve the content of the specification.

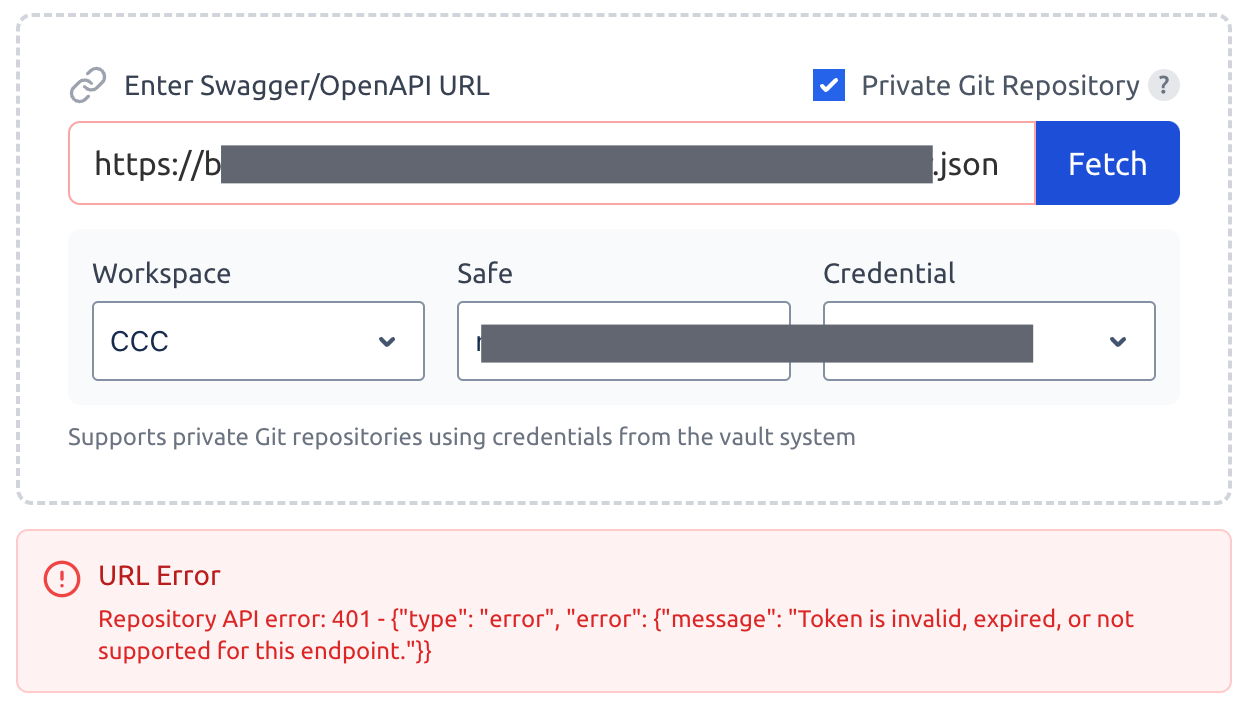

Note: If the OpenAPI file is located in a private Git repository, or the URL otherwise requires authentication, you must provide a credential with read-only access to that resource. Tick the 'Private Git Repository' checkbox and select the pre-configured credential (registered by your admin as per section #2.1) from the dropdown.

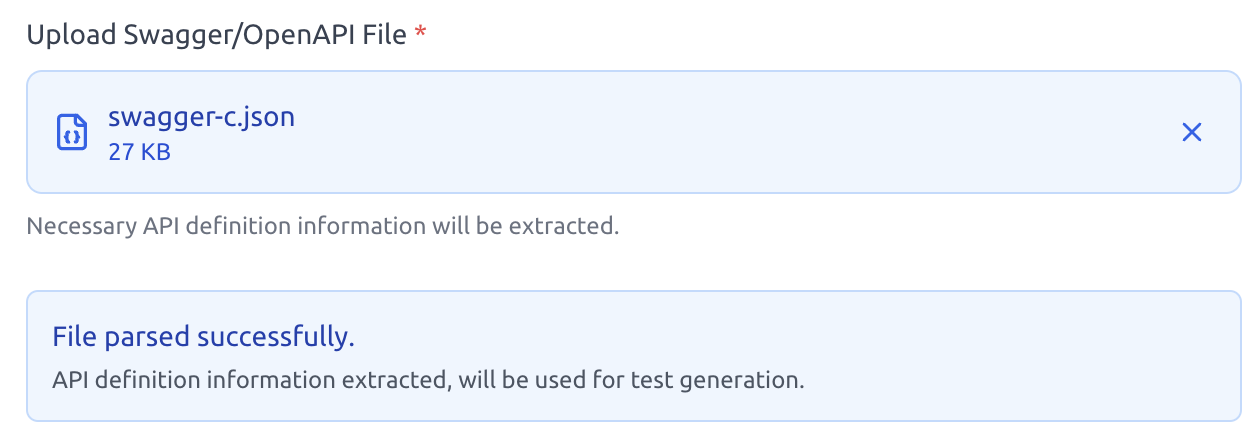

Once the file is successfully parsed by Testany, a confirmation message will be displayed, indicating that the specification was processed correctly.

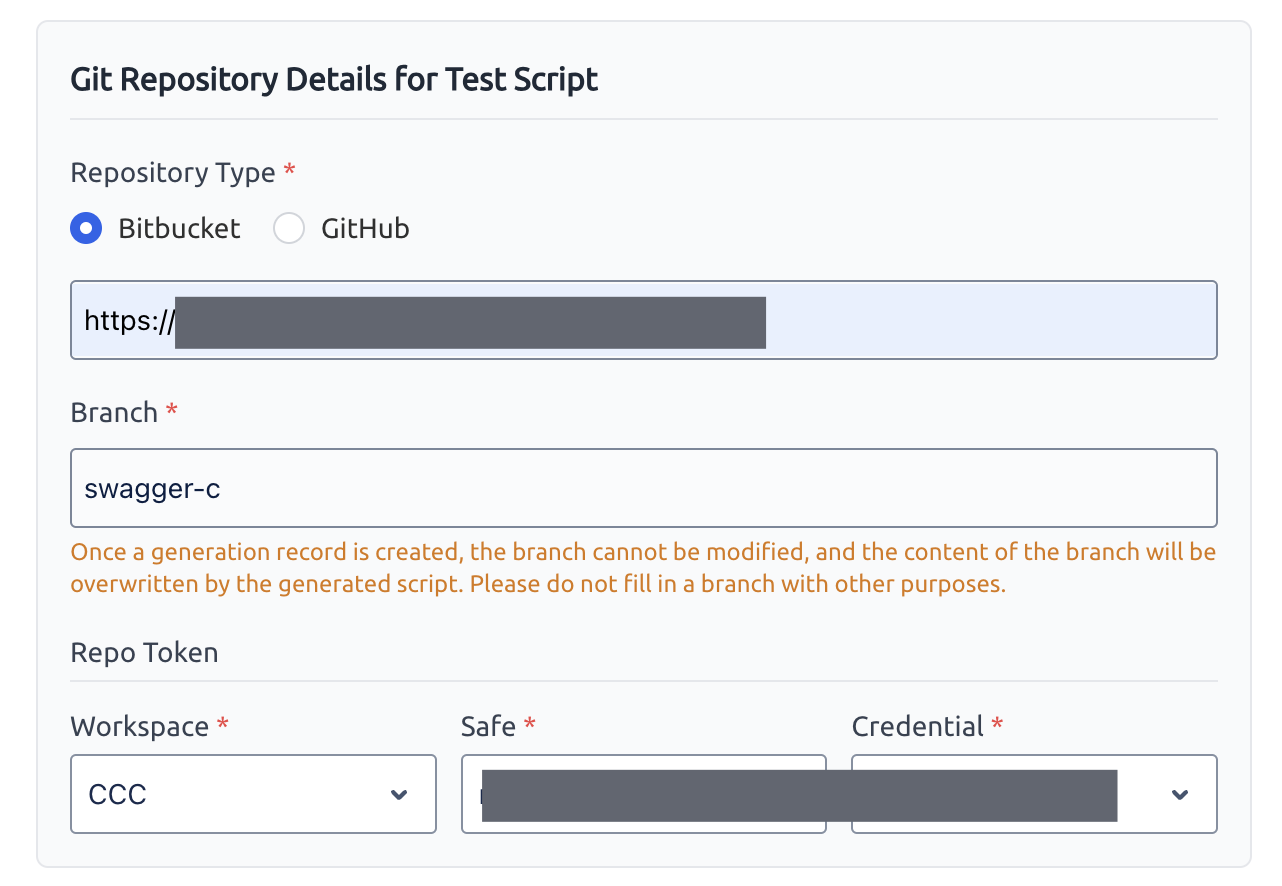
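If parsing fails, a quick local check of the declared version can help narrow down the cause. The sketch below assumes the specification is a JSON file (a YAML file would need a YAML parser, which is not part of the Python standard library) and applies this guide's "3.x and above" rule. The function name is hypothetical, and the check is not Testany's own parser.

```python
import json


def check_openapi_version(spec_text: str) -> str:
    """Return the declared OpenAPI version, or raise ValueError if it is missing or below 3."""
    spec = json.loads(spec_text)
    version = spec.get("openapi")
    if not isinstance(version, str):
        # Swagger 2.0 documents declare "swagger": "2.0" instead of an "openapi" field.
        raise ValueError("No 'openapi' version field found")
    major = int(version.split(".")[0])
    if major < 3:
        raise ValueError(f"Unsupported OpenAPI version: {version}")
    return version


declared = check_openapi_version('{"openapi": "3.0.3", "info": {}, "paths": {}}')
```

A Swagger 2.0 document fails the first check because it has no "openapi" field at all.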

3.2.1.3 Repository Information

Specify the details of the Git repository where the generated test scripts should be stored:

Repository type: Bitbucket or GitHub

Repository URL: https://bitbucket.org/workspace/repo.git or https://github.com/username/repo.git

Branch: <your_branch_name> (the name cannot be changed after generation; see section #2.3)

Repo token: Select the pre-configured git credential with write access to the specified repository (see section #2.1)

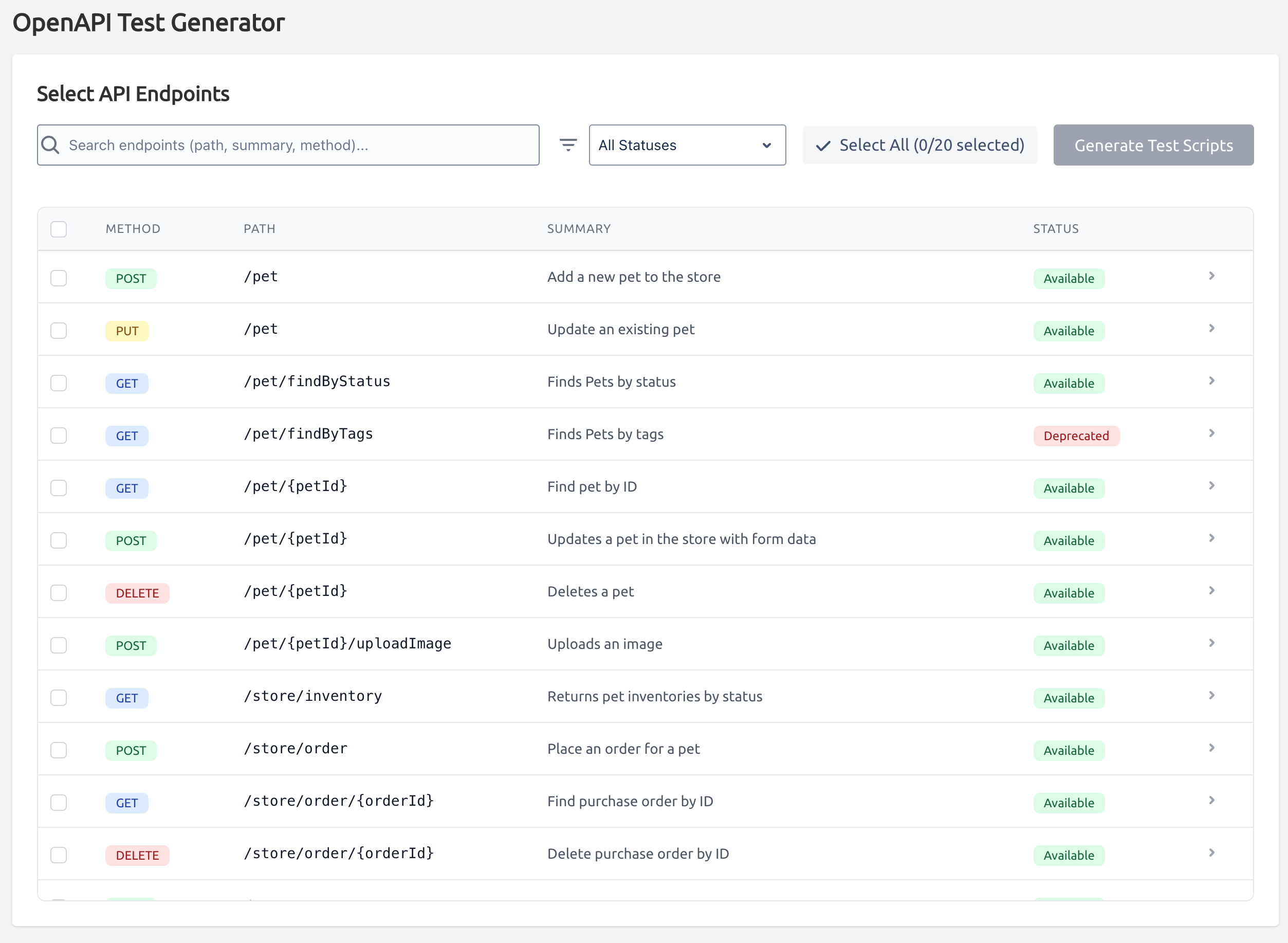

3.2.1.4 Choose Endpoints and Initiate Generation

In the final step of the generator wizard, you will see a list of API endpoints discovered from your OpenAPI specification.

Select the specific API endpoints for which you want to generate test scripts. You can select individual endpoints or all.

Click 'Generate Test Scripts'.

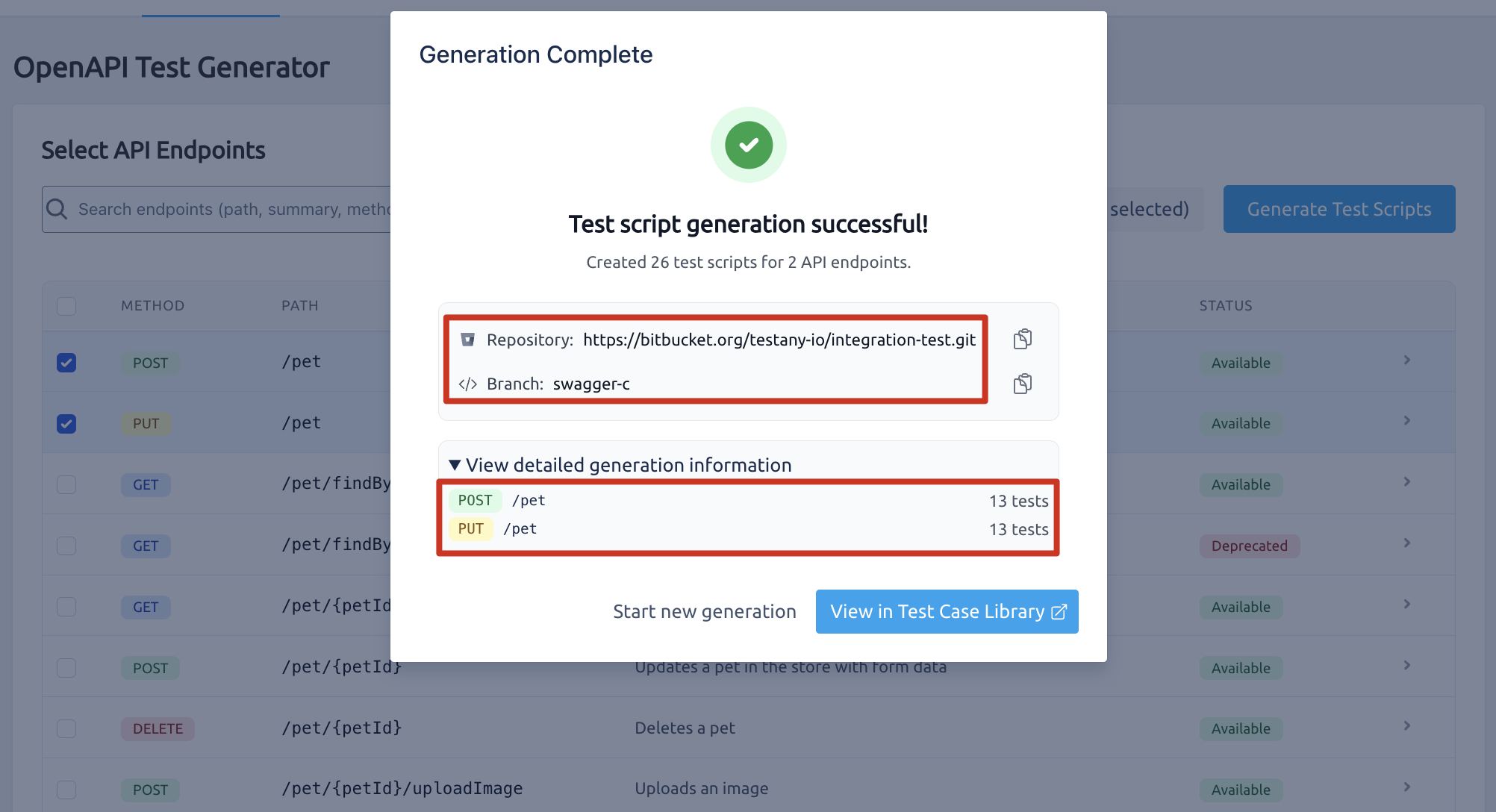

A confirmation popup will appear once the generation process is successfully completed and the generated test scripts are registered within Testany. This popup typically shows:

The Repository URL and branch name that were used for committing the generated scripts.

The number of test files (*.py files) generated for each selected endpoint.

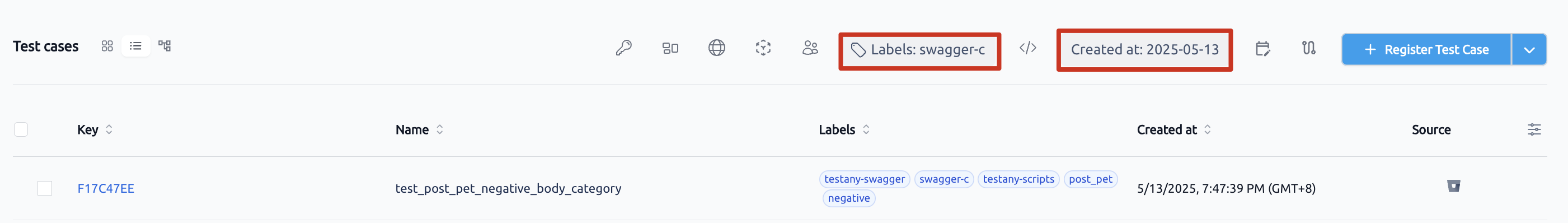

3.2.2 View Registered Test Cases in Testany

Upon successful generation and registration, the newly created test cases will appear in your Test Case Library in Testany. These represent the abstract test cases linked to the generated scripts in your Git repository.

To easily find the registered cases from this specific generation, you can perform a filtered search in the Test Case Library using a combination of labels and criteria:

labels = {your_branch_name} (Testany automatically adds a label corresponding to the target branch name)

created_from = {generation_date} (Filter by the date the generation record was created)

owner = {user_id} (Filter by the user who initiated the generation)

For more detailed information about the registered test cases, please refer to the guide Bulk Import Test Cases from Git #3.1.9.

3.2.3 View Generated Test Scripts in Git

The core output of the OpenAPI Test Generator is the set of test scripts committed to the specified branch of your Git repository. Testany organizes these scripts in a standardized structure.

3.2.3.1 Branch Overview

Navigate to the specific branch you configured in section #3.2.1.3 within your Git repository. You will find a new directory structure created by Testany (typically at the root of the repository or within a designated subdirectory if configured). The structure is organized per API endpoint:

Testany-scripts/ (Example root directory)
├─ env/ (Contains environment variables/test data files)
│  ├─ {method}_{endpoint_1_url}_environment.txt
│  ├─ {method}_{endpoint_2_url}_environment.txt
│  └─ ...
├─ {method}_{endpoint_1_url}/ (Directory for a specific endpoint, e.g., post_pet_petId)
│  ├─ positive/ (Contains positive test scripts)
│  │  └─ test_{method}_{endpoint_1_url}_positive.py
│  ├─ negative/ (Contains negative test scripts)
│  │  ├─ test_{method}_{endpoint_1_url}_negative_header_{field}.py
│  │  ├─ test_{method}_{endpoint_1_url}_negative_path_{field}.py
│  │  ├─ test_{method}_{endpoint_1_url}_negative_query_{field}.py
│  │  ├─ test_{method}_{endpoint_1_url}_negative_body_{field}.py
│  │  └─ ... (Other negative scenarios based on schema)
│  └─ positive_boundary/ (Contains positive boundary test scripts)
│     ├─ test_{method}_{endpoint_1_url}_positive_boundary_header_{field}.py
│     └─ ... (Other positive boundary scenarios based on schema)
├─ {method}_{endpoint_2_url}/
│  └─ ...
└─ ...

Example Structure for POST /pet/{petId}:

Testany generates a dedicated directory and files following the naming convention {method}_{endpoint_url}. This structure logically groups test scripts by endpoint and test type (positive/positive boundary/negative), making it easy to navigate and manage the generated code.
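The naming convention can be sketched in Python as follows. This is an illustration inferred only from the names shown in this guide (POST /pet/{petId} becomes post_pet_petId). How Testany handles other characters in a path, such as hyphens or dots, is not covered here, and both function names are hypothetical.

```python
import re


def endpoint_dir_name(method: str, path: str) -> str:
    """Build a {method}_{endpoint_url} name from an HTTP method and an OpenAPI path."""
    # Drop empty segments and strip the braces around path parameters.
    segments = [re.sub(r"[{}]", "", segment) for segment in path.split("/") if segment]
    return "_".join([method.lower(), *segments])


def negative_script_name(method: str, path: str, location: str, field: str) -> str:
    """Build the file name of a negative test script for one field."""
    return f"test_{endpoint_dir_name(method, path)}_negative_{location}_{field}.py"


directory = endpoint_dir_name("POST", "/pet/{petId}")
script = negative_script_name("POST", "/pet/{petId}", "body", "status")
```

Here directory is post_pet_petId and script is test_post_pet_petId_negative_body_status.py, which match the names used in the example structure below.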

Testany-scripts/

├─ env/

│ └─ post_pet_petId_environment.txt (Environment variables/test data for this endpoint)

└─ post_pet_petId/

├─ positive/

│ └─ test_post_pet_petId_positive.py (Positive test script)

└─ negative/

│ ├─ test_post_pet_petId_negative_body_name.py (Negative test for 'name' field in body)

│ ├─ test_post_pet_petId_negative_body_status.py (Negative test for 'status' field in body)

│ └─ test_post_pet_petId_negative_path_petId.py (Negative test for 'petId' in path)

└─ positive_boundary/

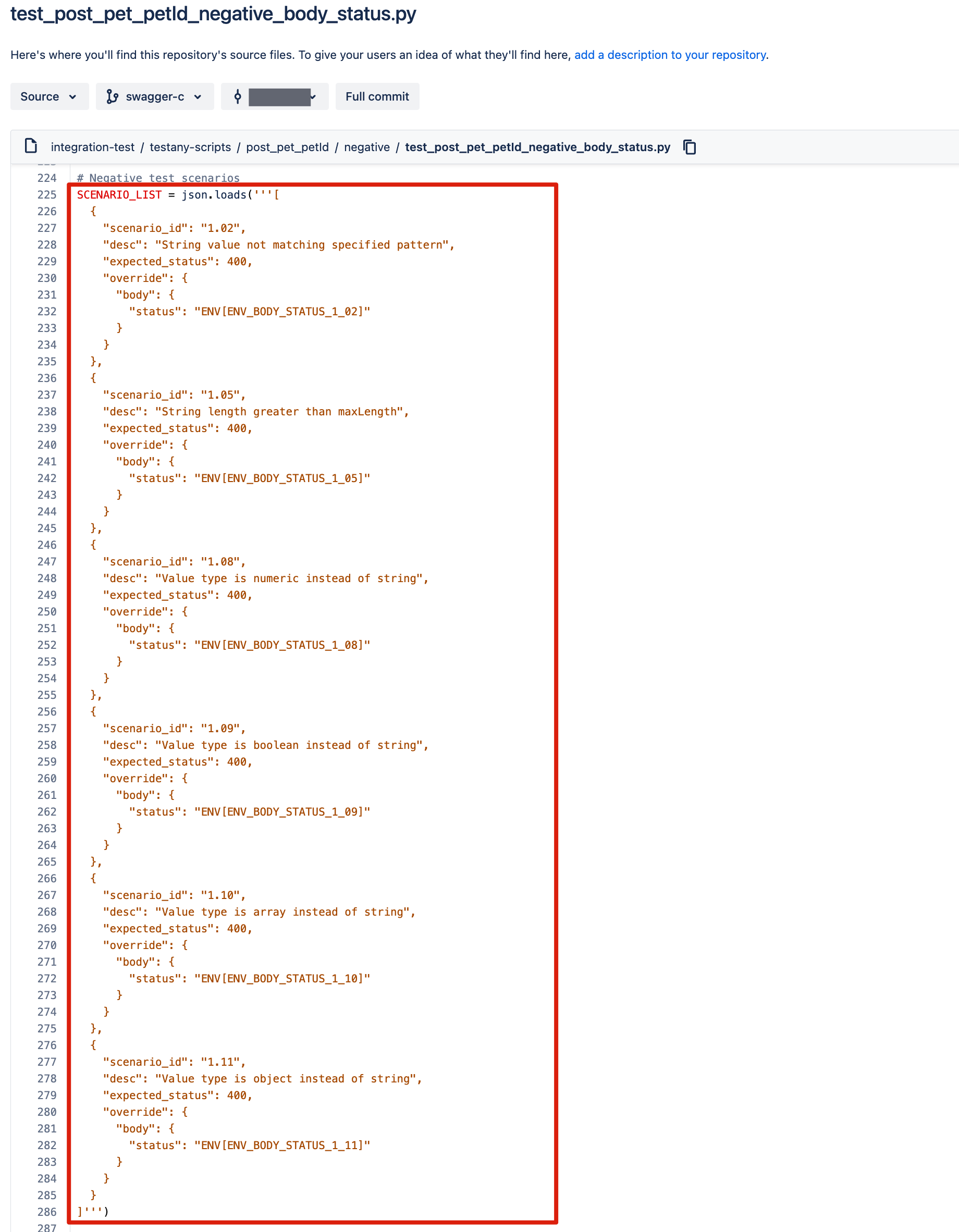

└─ test_post_pet_petId_positive_boundary_body_name.py (Positive boundary test for 'name' field in body)3.2.3.2 Negative test scenarios

Testany-scripts
├─ {method}_{endpoint_1_url}
│  └─ negative
│     ├─ test_{method}_{endpoint_1_url}_negative_header_{field}.py
│     ├─ test_{method}_{endpoint_1_url}_negative_path_{field}.py
│     ├─ test_{method}_{endpoint_1_url}_negative_query_{field}.py
│     └─ test_{method}_{endpoint_1_url}_negative_body_{field}.py

The negative/ subdirectory for each endpoint contains scripts (.py files) designed to test how the API handles invalid or unexpected input based on the OpenAPI specification's schema definitions. These tests are specifically designed to cover scenarios where the input data or request structure violates the constraints defined in the OpenAPI schema. Such violations typically trigger client-side error responses with 400-level HTTP status codes, rather than server errors or authentication/authorization issues (like 401 Unauthorized or 403 Forbidden). The file names indicate the specific field and location being tested (e.g., _negative_body_status tests the status field within the request body).

Testany's generation process considers various negative scenarios defined by the OpenAPI specification constraints (like minLength, maxLength, format, pattern, enum, nullable, type, required, etc.). For a complete overview of all supported negative test scenarios organized by data type and scenario ID, please refer to Appendix.
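To make the idea concrete, the sketch below derives one invalid value per violated constraint for a string field. It is a simplified illustration written for this guide, not Testany's generator: the function name and scenario labels are hypothetical, and format and pattern violations are left out because they depend on the specific format or regular expression.

```python
def negative_string_values(schema: dict) -> dict[str, object]:
    """Map a scenario label to one value that violates the given string schema."""
    # Type mismatches apply to any string field.
    cases: dict[str, object] = {
        "numeric_instead_of_string": 12345,
        "boolean_instead_of_string": True,
        "array_instead_of_string": ["text"],
        "object_instead_of_string": {"key": "text"},
    }
    min_length = schema.get("minLength")
    if isinstance(min_length, int) and min_length > 0:
        cases["shorter_than_minLength"] = "a" * (min_length - 1)
    max_length = schema.get("maxLength")
    if isinstance(max_length, int):
        cases["longer_than_maxLength"] = "a" * (max_length + 1)
    if "enum" in schema:
        candidate = "not_in_enum"
        while candidate in schema["enum"]:
            candidate += "_x"  # keep extending until the value is outside the enum
        cases["not_in_enum"] = candidate
    if not schema.get("nullable", False):
        cases["null_when_not_nullable"] = None
    return cases


# Hypothetical schemas for the 'name' and 'status' body fields.
name_cases = negative_string_values({"type": "string", "minLength": 3, "maxLength": 5})
status_cases = negative_string_values({"type": "string", "enum": ["available", "sold"]})
```

Each value would be sent in place of the valid one while the rest of the request stays valid, so a 400-level response can be attributed to that single field.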

3.2.3.3 Positive boundary test scenarios

Testany-scripts
├─ {method}_{endpoint_1_url}
│  └─ positive_boundary
│     ├─ test_{method}_{endpoint_1_url}_positive_boundary_header_{field}.py
│     ├─ test_{method}_{endpoint_1_url}_positive_boundary_path_{field}.py
│     ├─ test_{method}_{endpoint_1_url}_positive_boundary_query_{field}.py
│     └─ test_{method}_{endpoint_1_url}_positive_boundary_body_{field}.py

The positive_boundary/ subdirectory for each endpoint contains scripts (.py files) designed to test how the API handles input data at the valid boundaries of constraints defined in the OpenAPI specification's schema definitions. These tests are specifically designed to cover scenarios where the input data uses valid values that are exactly at the permitted limits (such as minimum/maximum lengths, minimum/maximum values, etc.). These boundary tests typically result in successful responses with 200-level HTTP status codes when the API correctly handles edge cases within valid ranges. The file names indicate the specific field and location being tested (e.g., _positive_boundary_body_name tests the name field within the request body).

Testany's generation process considers various positive boundary scenarios defined by the OpenAPI specification constraints (specifically minLength, maxLength, minimum, maximum, exclusiveMinimum, exclusiveMaximum). For a complete overview of all supported positive_boundary test scenarios organized by data type and scenario ID, please refer to Appendix.
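The boundary constraints listed above can be turned into concrete values along these lines. This sketch assumes the OpenAPI 3.0 form in which exclusiveMinimum and exclusiveMaximum are booleans, which is how the Appendix words them; in OpenAPI 3.1 they are numeric and would need different handling. The function name and labels are hypothetical, and only string and integer fields are covered.

```python
def positive_boundary_values(schema: dict) -> dict[str, object]:
    """Map a scenario label to a valid value that sits exactly on a schema limit."""
    cases: dict[str, object] = {}
    if schema.get("type") == "string":
        if "minLength" in schema:
            cases["length_equal_to_minLength"] = "a" * schema["minLength"]
        if "maxLength" in schema:
            cases["length_equal_to_maxLength"] = "a" * schema["maxLength"]
    elif schema.get("type") == "integer":
        if "minimum" in schema:
            if schema.get("exclusiveMinimum", False):
                # The minimum itself is invalid, so the nearest valid integer is used.
                cases["just_above_minimum"] = schema["minimum"] + 1
            else:
                cases["equal_to_minimum"] = schema["minimum"]
        if "maximum" in schema:
            if schema.get("exclusiveMaximum", False):
                cases["just_below_maximum"] = schema["maximum"] - 1
            else:
                cases["equal_to_maximum"] = schema["maximum"]
    return cases


# Hypothetical schemas used only for illustration.
name_bounds = positive_boundary_values({"type": "string", "minLength": 1, "maxLength": 3})
quantity_bounds = positive_boundary_values(
    {"type": "integer", "minimum": 1, "maximum": 10, "exclusiveMaximum": True}
)
```

For the quantity schema the sketch yields 1 (equal to the minimum) and 9 (just below the exclusive maximum of 10), both of which a correct API should accept.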

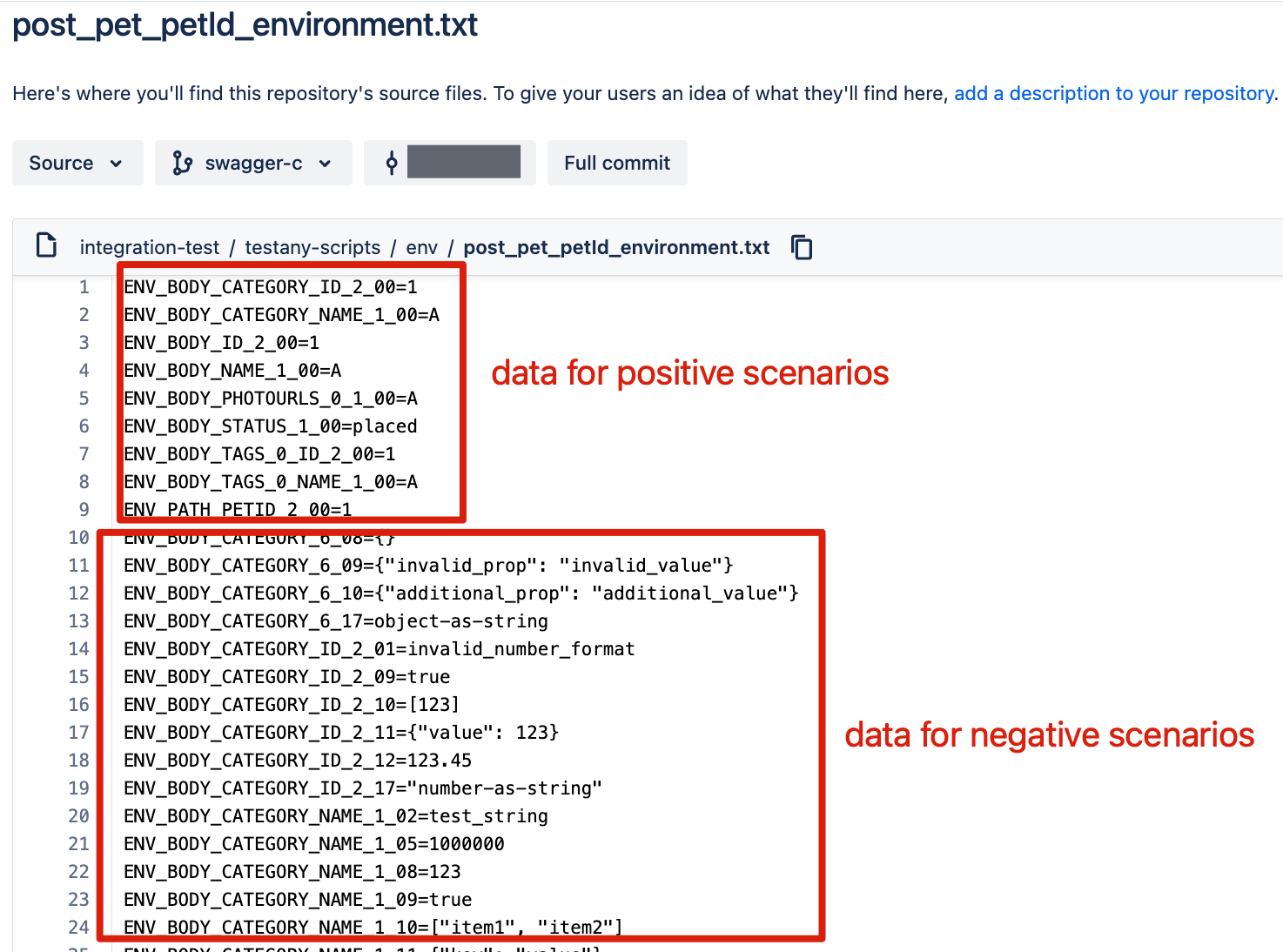

3.2.3.4 Environment variables

The env/ directory contains text files (one per endpoint) named {method}_{endpoint_url}_environment.txt. These files hold the necessary test data and environment-specific variables required for executing both the positive and negative test scenarios generated for the corresponding endpoint.

Testany-scripts/
├─ env/
│  ├─ {method}_{endpoint_1_url}_environment.txt (Data for endpoint 1)
│  ├─ {method}_{endpoint_2_url}_environment.txt (Data for endpoint 2)
│  └─ ...

Each {method}_{endpoint_url}_environment.txt file lists the variables (like base URL, specific parameter values for positive cases, invalid values for negative cases) that the generated Python scripts will use during execution.

If you need to modify the generated test data to align with specific business requirements, test environments, or data availability, you should update these .txt files directly within your Git repository. After making changes, commit them and push the updates following your standard Git workflow. Testany will pull the latest version of these environment files from the specified branch when executing these scripts. This applies to all test types including positive, negative, and positive boundary tests.

Example for post_pet_petId_environment.txt:

This file would contain the test data required by test_post_pet_petId_positive.py, all test_post_pet_petId_negative_*.py and all test_post_pet_petId_positive_boundary_*.py scripts under the post_pet_petId/ directory. This includes valid petId values, valid (including boundary values for applicable constraints) and invalid request body data for fields like name and status, and potentially other environment configurations.

3.3 Update an Existing Generation Record

You can update an existing generation record to regenerate test scripts based on a modified OpenAPI specification or to select a different set of endpoints from the same specification file source.

3.3.1 Select a Generation Record to Update

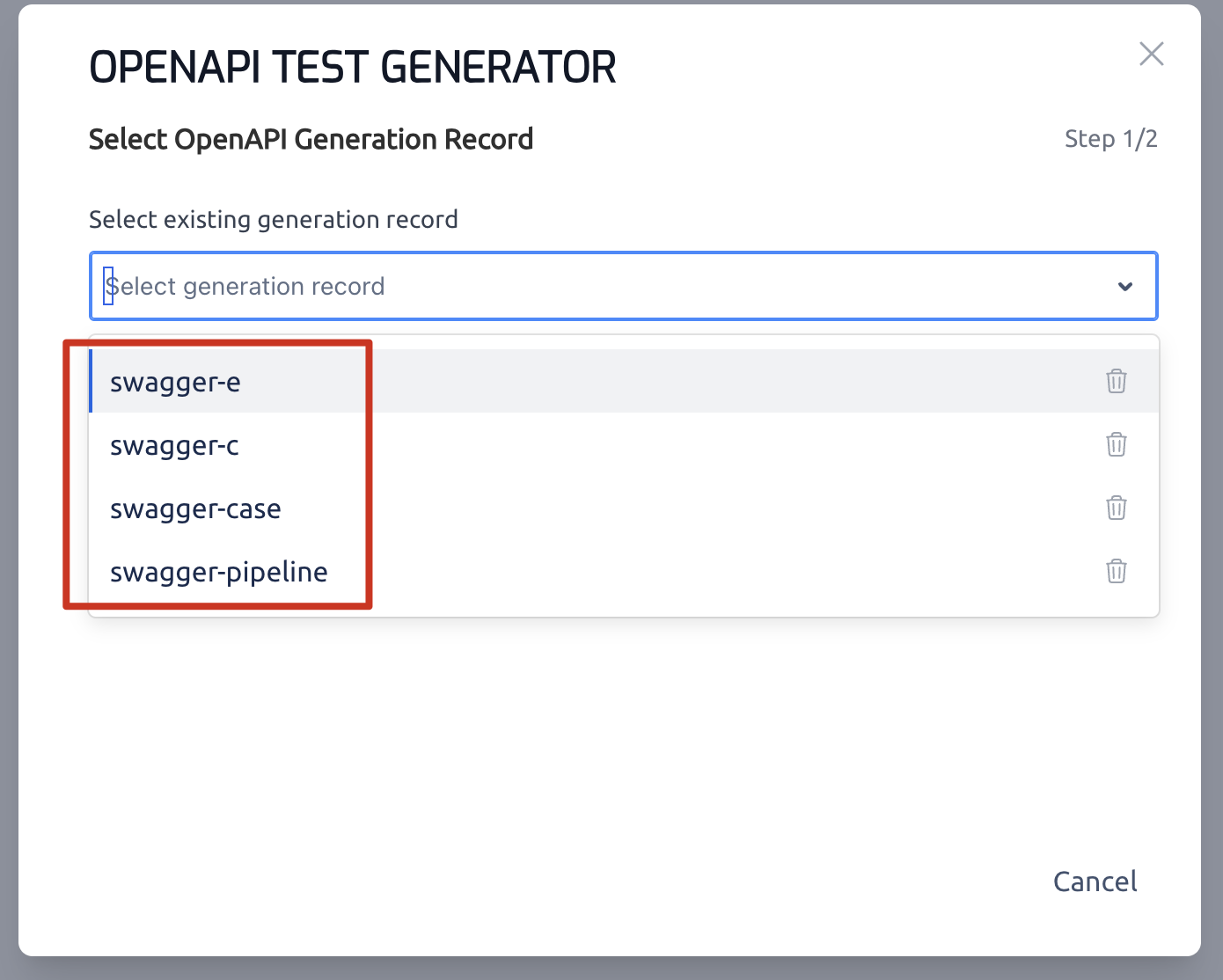

In the 'Step 1/2' of the generator wizard:

Expand the 'generation record' dropdown list.

Select the specific generation record you wish to update and re-generate.

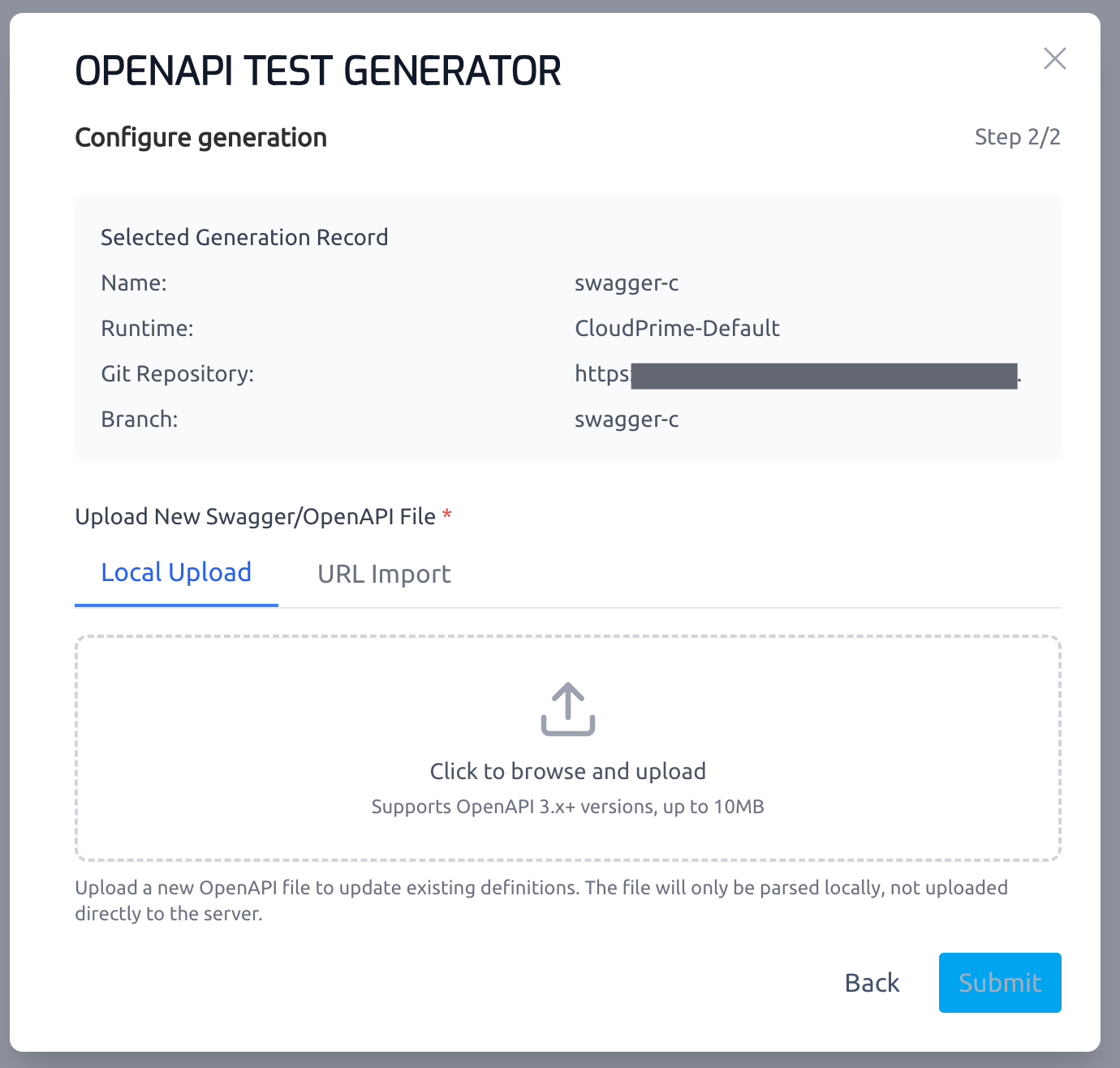

3.3.2 Re-upload or Re-import OpenAPI

After selecting the record, you will proceed to the configuration steps (similar to section #3.2.1). Provide the updated OpenAPI specification file. This can be done by re-uploading a local file or re-importing from a URL.

Click 'Submit' after providing the updated specification.

3.3.3 Choose Endpoints

Proceed to the 'Select API Endpoints' page (similar to section #3.2.1.4). Select the API endpoints for which you want to generate or re-generate test scripts.

Key Notes on Updating:

Overwriting Generated Code: The test scripts and environment files for the selected APIs in the specified Git branch will be re-generated and completely replaced based on the new OpenAPI specification and your endpoint selections. This means the content previously generated for these APIs in that branch will be overwritten.

Branch Overriding: As a direct consequence of the previous point, the entire content within the Testany-scripts/ directory on the specific branch associated with this generation record will be updated or replaced based on the new generation output. Any manual changes you made within the previously generated files might be lost if those files are regenerated.

Impact on Unselected APIs: If an API endpoint that was selected in the previous generation of this record is manually unselected in this update step, its corresponding registered test case in Testany will be either deleted or unlinked from the Git repository upon completion of the import process. The exact behavior (delete vs. unlink) depends on whether the test case is currently assembled into any test pipelines (see reference below).

Impact of Renamed/Moved APIs: If an API endpoint that was selected in the previous generation is renamed or moved within the re-uploaded OpenAPI specification, Testany will treat it as a new or missing endpoint. It will no longer appear as the same selectable endpoint in the generation wizard for this record. Consequently, its previously registered test case in Testany (linked to the old name/path) will be either deleted or unlinked upon completion of the process (see reference below).

For details on how test cases are handled when the underlying Git source changes, refer to Import Test Cases from Git #3.3.2.
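Before re-uploading a modified specification, it can help to compare its endpoints with those of the previous version, since any endpoint that no longer appears under the same method and path is treated as missing. The sketch below is one way to do that locally, assuming both specifications are already loaded as Python dictionaries. The function names and the sample specs are hypothetical.

```python
# Operation keys that an OpenAPI 3 path item may contain.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_endpoints(spec: dict) -> set[tuple[str, str]]:
    """Collect (METHOD, path) pairs from the 'paths' section of a specification."""
    endpoints = set()
    for path, path_item in spec.get("paths", {}).items():
        for key in path_item:
            # Skip non-operation keys such as 'parameters' or 'summary'.
            if key.lower() in HTTP_METHODS:
                endpoints.add((key.upper(), path))
    return endpoints


def compare_endpoints(old_spec: dict, new_spec: dict) -> dict[str, list[tuple[str, str]]]:
    """Report endpoints that disappeared from, or were added to, the new specification."""
    old, new = list_endpoints(old_spec), list_endpoints(new_spec)
    return {"missing": sorted(old - new), "added": sorted(new - old)}


# Hypothetical before/after specs in which one path was renamed.
old_spec = {"paths": {"/pet/{petId}": {"get": {}, "post": {}, "parameters": []}}}
new_spec = {"paths": {"/pet/{petId}": {"get": {}}, "/pets/{petId}": {"post": {}}}}

changes = compare_endpoints(old_spec, new_spec)
```

In this sample, POST /pet/{petId} shows up as missing and POST /pets/{petId} as added, which is how a rename looks from the generator's point of view. Test cases registered for the missing entries are the ones to review before submitting the update.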

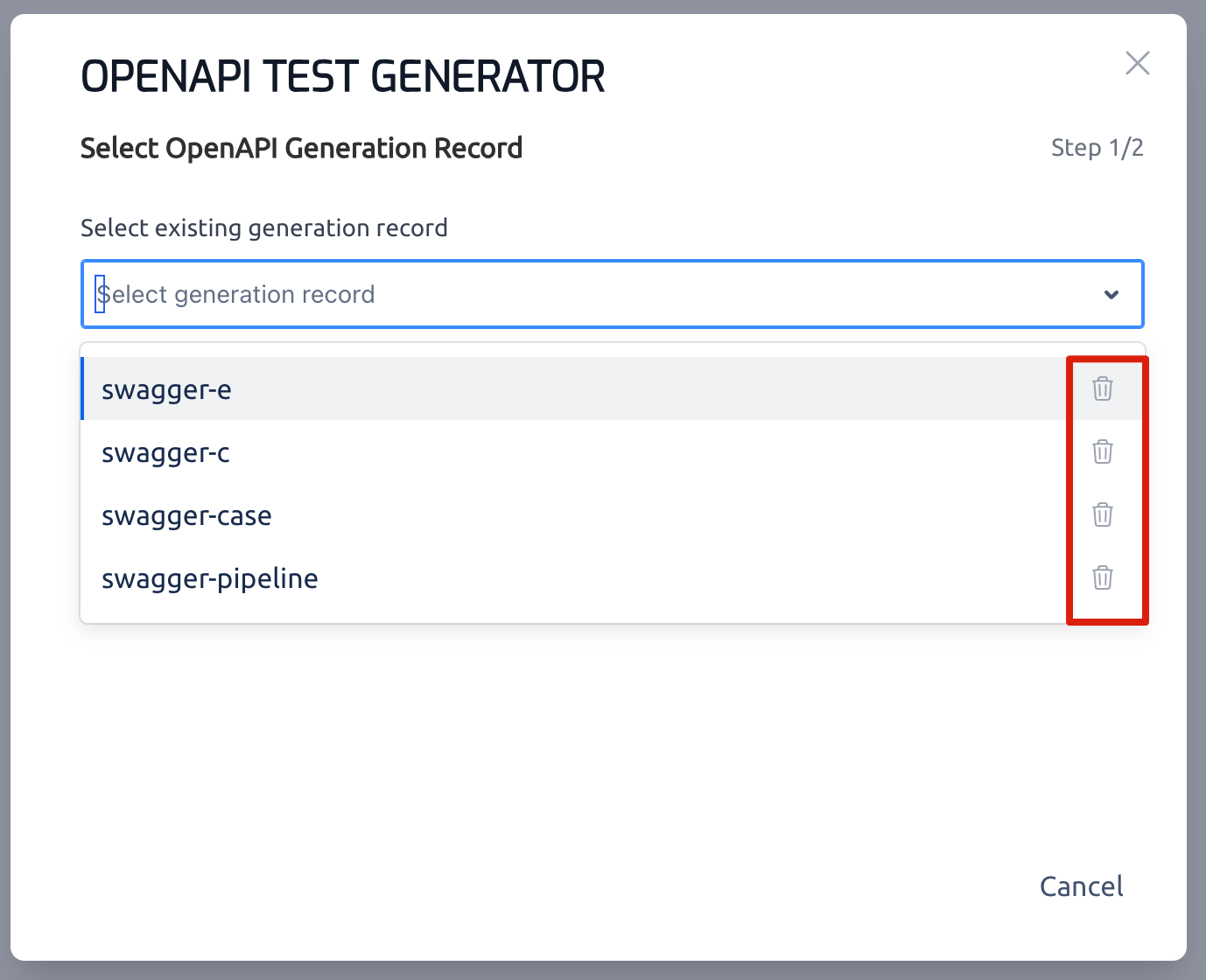

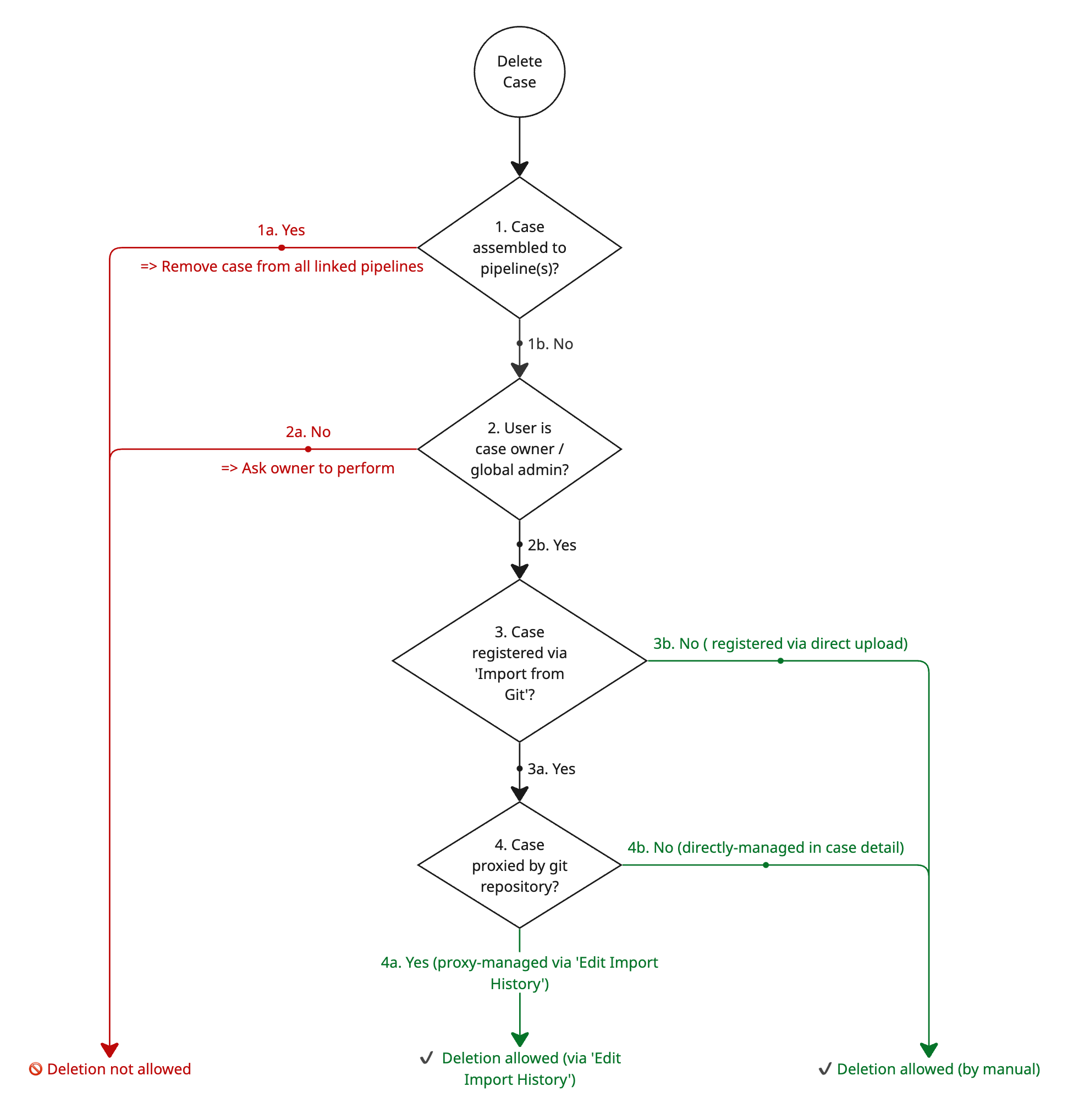

3.4 Delete a Generation Record

Deleting a generation record removes the association within Testany between the record and the registered test cases it created. It also affects the registered test cases themselves.

3.4.1 Select and Delete a Generation Record

Before deleting a generation record, carefully evaluate its impact on the test cases registered through this generation process.

Impact of deletion on registered test cases:

The corresponding test cases not assembled into any pipeline → Permanently deleted from Testany.

The corresponding test cases assembled to pipeline(s) → Will remain in Testany but will be unlinked from the Git repository. You can remove these test cases from the associated test pipelines before proceeding with this operation or manually delete them after removing the generation record.

Note: There is no impact to the generated test scripts. They remain in your Git repository.
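The rule above can be written down as a small helper for reviewing a record before deleting it. The sketch assumes you have collected, by hand or by other means, which pipelines each registered test case belongs to. The function name, the input mapping, and the case names are hypothetical, and nothing here calls Testany.

```python
def preview_deletion_impact(pipelines_by_case: dict[str, list[str]]) -> dict[str, str]:
    """Predict what happens to each test case when its generation record is deleted."""
    return {
        case: "kept in Testany, unlinked from Git" if pipelines else "permanently deleted"
        for case, pipelines in pipelines_by_case.items()
    }


# Hypothetical test cases and pipeline memberships.
impact = preview_deletion_impact(
    {
        "test_post_pet_petId_positive": ["nightly-regression"],
        "test_post_pet_petId_negative_body_name": [],
    }
)
```

Cases reported as permanently deleted are the ones worth double-checking, since that outcome cannot be reversed from within Testany.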

In 'Step 1/2' of the generator wizard:

Expand the 'generation record' dropdown list.

Select the specific generation record you wish to delete.

Click the delete button (represented by a Trash icon) next to the selected item.

4. Frequently Asked Questions (FAQ)

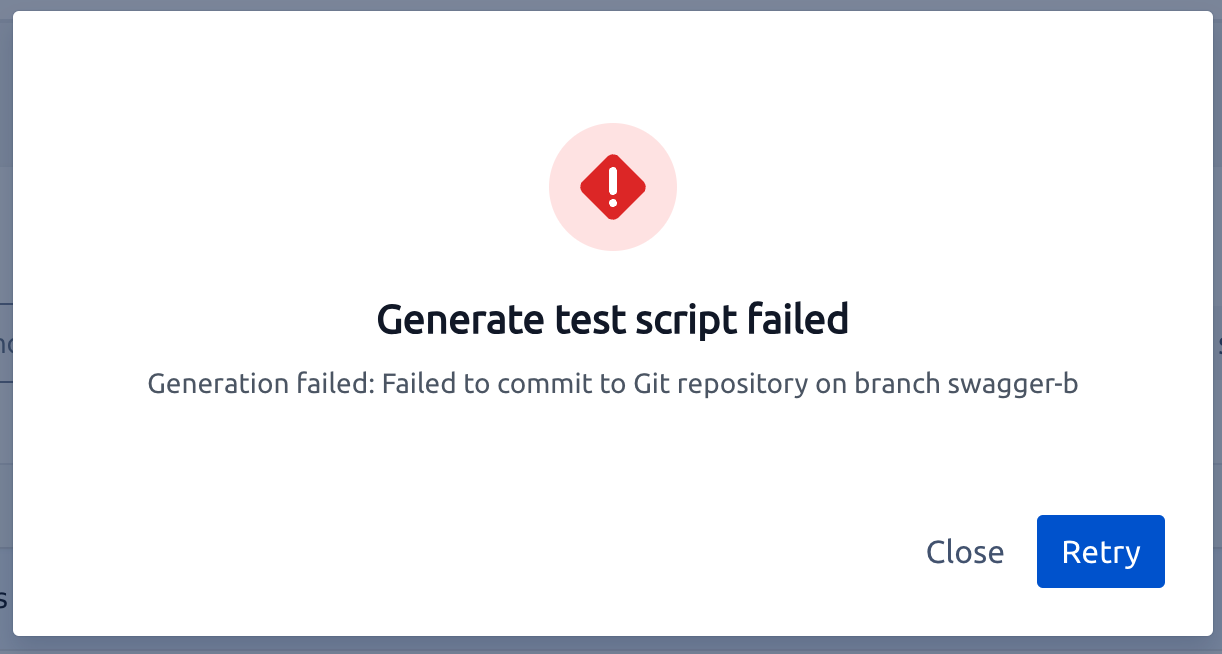

Question: Why am I seeing errors during the generation process?

💡 Answer: These errors typically indicate issues with accessing the source OpenAPI specification or the target Git repository. Ensure the following:

If using URL import, verify that the OpenAPI URL is correct and accessible from the Testany environment.

If the URL or repository is private, confirm that the correct Git credential (access token) was selected during configuration.

Verify that the selected credential has the required access level: read-only for fetching from a private URL (section #3.2.1.2.2) and write for committing to the repository (section #3.2.1.3).

Check the Git repository URL and branch name for any typos or incorrect formatting.
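The last check can be partly automated. The sketch below looks for common formatting slips in the repository URL and branch name. It assumes the URL shape used in this guide's examples (https, ending in .git) and applies only a subset of Git's branch naming rules, so passing it does not guarantee that Testany or your Git provider will accept the values. The function name is hypothetical.

```python
import re
from urllib.parse import urlparse

# Characters Git does not allow in branch names (a subset of its ref-name rules).
FORBIDDEN_BRANCH_CHARS = re.compile(r"[\s~^:?*\[\\]")


def check_repo_settings(repo_url: str, branch: str) -> list[str]:
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if repo_url != repo_url.strip():
        problems.append("Repository URL has leading or trailing whitespace")
    parsed = urlparse(repo_url.strip())
    if parsed.scheme != "https" or not parsed.netloc:
        problems.append("Repository URL should look like https://host/owner/repo.git")
    elif not parsed.path.endswith(".git"):
        problems.append("Repository URL does not end with .git as in this guide's examples")
    if not branch:
        problems.append("Branch name is empty")
    elif (
        FORBIDDEN_BRANCH_CHARS.search(branch)
        or ".." in branch
        or branch.startswith(("-", "/"))
        or branch.endswith(("/", ".lock"))
    ):
        problems.append("Branch name contains characters or sequences Git does not allow")
    return problems


ok = check_repo_settings("https://github.com/username/repo.git", "openapi-generated-tests")
bad = check_repo_settings("https://github.com/username/repo.git ", "my branch")
```

The first call finds nothing, while the second reports the trailing space in the URL and the space in the branch name.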

Question: Why can't I delete certain test cases?

💡 Answer: The ability to delete a test case in Testany depends on its origin and ownership. Generally:

You can delete test cases that you directly uploaded yourself.

You can delete test cases that were imported from a Git repository if you were the user who initiated the import/generation and the case is not currently linked to a test pipeline that prevents its deletion.

Question: How do I track which repository and branch a specific test case came from?

💡 Answer: For test cases that were imported from a Git repository or generated by the OpenAPI Test Generator, open the test case detail page and hover over the source, or click the icon to open the source file.

Appendix

The following table catalogs all supported test scenarios in Testany's generation process. For each scenario, the table specifies:

Scenario ID: Unique identifier for the test scenario

Field Type: Data type the scenario applies to

Scenario: Description of the positive, negative, or positive boundary test case

Required Constraints: OpenAPI specification constraints that must be defined

Skip Conditions: Circumstances under which the scenario is not generated

| Scenario ID | Field Type | Scenario | Required Constraints | Skip Conditions |
|---|---|---|---|---|
| 1.00 | string | Valid string value matching all constraints | | |
| 1.01 | string | String value not matching specified format | | |
| 1.02 | string | String value not matching specified pattern | | |
| 1.03 | string | String length less than minLength | | |
| 1.04 | string | Empty string when minLength > 0 | | |
| 1.05 | string | String length greater than maxLength | | |
| 1.06 | string | String value not in enum list | | |
| 1.07 | string | String value is null when nullable is false | | |
| 1.08 | string | Value type is numeric instead of string | | |
| 1.09 | string | Value type is boolean instead of string | | |
| 1.10 | string | Value type is array instead of string | | |
| 1.11 | string | Value type is object instead of string | | |
| 1.21 | string | This field is not present in the payload | The field is required | |
| 1.22 | string | String length equal to minLength | | |
| 1.23 | string | String length equal to maxLength | | |
| 2.00 | integer | Valid integer value matching all constraints | | |
| 2.01 | integer | Integer value not matching specified format | | |
| 2.03 | integer | Integer value less than minimum | | |
| 2.05 | integer | Integer value greater than maximum | | |
| 2.06 | integer | Integer value not in enum list | | Any of |
| 2.07 | integer | Integer value is null when nullable is false | | |
| 2.09 | integer | Value type is boolean instead of integer | | |
| 2.10 | integer | Value type is array instead of integer | | |
| 2.11 | integer | Value type is object instead of integer | | |
| 2.12 | integer | Value type is float instead of integer | | |
| 2.13 | integer | Integer value equals minimum when exclusiveMinimum is true | | |
| 2.15 | integer | Integer value equals maximum when exclusiveMaximum is true | | |
| 2.16 | integer | Integer value not multiple of specified number | | Any of |
| 2.17 | integer | Value type is string instead of integer | | |
| 2.21 | integer | This field is not present in the payload | The field is required | |
| 2.22 | integer | Integer value equal to minimum | | |
| 2.23 | integer | Integer value equal to maximum | | |
| 2.24 | integer | Integer value just above minimum when exclusiveMinimum is true | | |
| 2.25 | integer | Integer value just below maximum when exclusiveMaximum is true | | |
| 3.00 | boolean | Valid boolean value matching all constraints | | |
| 3.08 | boolean | Value type is numeric instead of boolean | | |
| 3.10 | boolean | Value type is array instead of boolean | | |
| 3.11 | boolean | Value type is object instead of boolean | | |
| 3.17 | boolean | Value type is string instead of boolean | | |
| 3.21 | boolean | This field is not present in the payload | The field is required | |
| 4.00 | number | Valid number value matching all constraints | | |
| 4.01 | number | Number value not matching specified format | | |
| 4.03 | number | Number value less than minimum | | |
| 4.05 | number | Number value greater than maximum | | |
| 4.06 | number | Number value not in enum list | | Any of |
| 4.07 | number | Number value is null when nullable is false | | |
| 4.09 | number | Value type is boolean instead of number | | |
| 4.10 | number | Value type is array instead of number | | |
| 4.11 | number | Value type is object instead of number | | |
| 4.13 | number | Number value equals minimum when exclusiveMinimum is true | | |
| 4.15 | number | Number value equals maximum when exclusiveMaximum is true | | |
| 4.16 | number | Number value not multiple of specified number | | Any of |
| 4.17 | number | Value type is string instead of number | | |
| 4.21 | number | This field is not present in the payload | The field is required | |
| 4.22 | number | Number value equal to minimum | | |
| 4.23 | number | Number value equal to maximum | | |
| 4.24 | number | Number value just above minimum when exclusiveMinimum is true | | |
| 4.25 | number | Number value just below maximum when exclusiveMaximum is true | | |
| 5.00 | array | Valid array value matching all constraints | | |
| 5.03 | array | Array length less than minItems | | |
| 5.04 | array | Array is empty when minItems > 0 | | |
| 5.05 | array | Array length greater than maxItems | | |
| 5.07 | array | Array value is null when nullable is false | | |
| 5.08 | array | Value type is numeric instead of array | | |
| 5.09 | array | Value type is boolean instead of array | | |
| 5.11 | array | Value type is object instead of array | | |
| 5.17 | array | Value type is string instead of array | | |
| 5.18 | array | Array has duplicate items when uniqueItems is true | | |
| 5.21 | array | This field is not present in the payload | The field is required | |
| 5.22 | array | Array length equal to minItems | | |
| 5.23 | array | Array length equal to maxItems | | |
| 6.00 | object | Valid object value matching all constraints | | |
| 6.03 | object | Object has fewer properties than minProperties | | |
| 6.04 | object | Object is empty when minProperties > 0 | | |
| 6.05 | object | Object has more properties than maxProperties | | |
| 6.07 | object | Object value is null when nullable is false | | |
| 6.08 | object | Value type is numeric instead of object | | |
| 6.09 | object | Value type is boolean instead of object | | |
| 6.10 | object | Value type is array instead of object | | |
| 6.17 | object | Value type is string instead of object | | |
| 6.19 | object | Object has missing required properties | The property is required | |
| 6.20 | object | Object has additional properties when additionalProperties is false | | |
| 6.21 | object | This field is not present in the payload | The field is required | |
| 6.22 | object | Object property count equal to minProperties | | |
| 6.23 | object | Object property count equal to maxProperties | | |

Example using test_post_pet_petId_negative_body_status.py:

If the OpenAPI definition for the status field in the POST /pet/{petId} request body is a string with specific constraints (e.g., an enum), the generated script test_post_pet_petId_negative_body_status.py will include tests corresponding to the applicable scenarios from the table above based on those constraints. For instance, if status is defined as a string enum, scenarios like 1.02 (not matching pattern, if pattern defined), 1.05 (length greater than maxLength, if maxLength defined), 1.06 (not in enum list), and type mismatches (1.08, 1.09, 1.10, 1.11) could be generated if relevant constraints are present.
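The selection logic in this example can be sketched as a lookup from schema keywords to negative scenario IDs for string fields. The mapping is inferred from the scenario descriptions in the table, since the Required Constraints and Skip Conditions columns are mostly empty here. Treat it as an assumption about how constraints relate to scenarios, not as Testany's actual rules. The function name is hypothetical.

```python
def applicable_negative_string_scenarios(schema: dict, required: bool = False) -> list[str]:
    """Return the negative scenario IDs (1.xx) that plausibly apply to a string schema."""
    ids = []
    if "format" in schema:
        ids.append("1.01")
    if "pattern" in schema:
        ids.append("1.02")
    if schema.get("minLength", 0) > 0:
        ids.extend(["1.03", "1.04"])
    if "maxLength" in schema:
        ids.append("1.05")
    if "enum" in schema:
        ids.append("1.06")
    if not schema.get("nullable", False):
        ids.append("1.07")
    if schema.get("type") == "string":
        # Type mismatch scenarios: numeric, boolean, array, object.
        ids.extend(["1.08", "1.09", "1.10", "1.11"])
    if required:
        ids.append("1.21")
    return ids


# Hypothetical definition of the 'status' field as a string enum.
status_schema = {"type": "string", "enum": ["available", "pending", "sold"]}
status_ids = applicable_negative_string_scenarios(status_schema)
```

For this status schema the sketch selects 1.06, 1.07, and the type mismatch scenarios 1.08 through 1.11. Adding a pattern or maxLength to the schema would bring in 1.02 or 1.05 as well.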